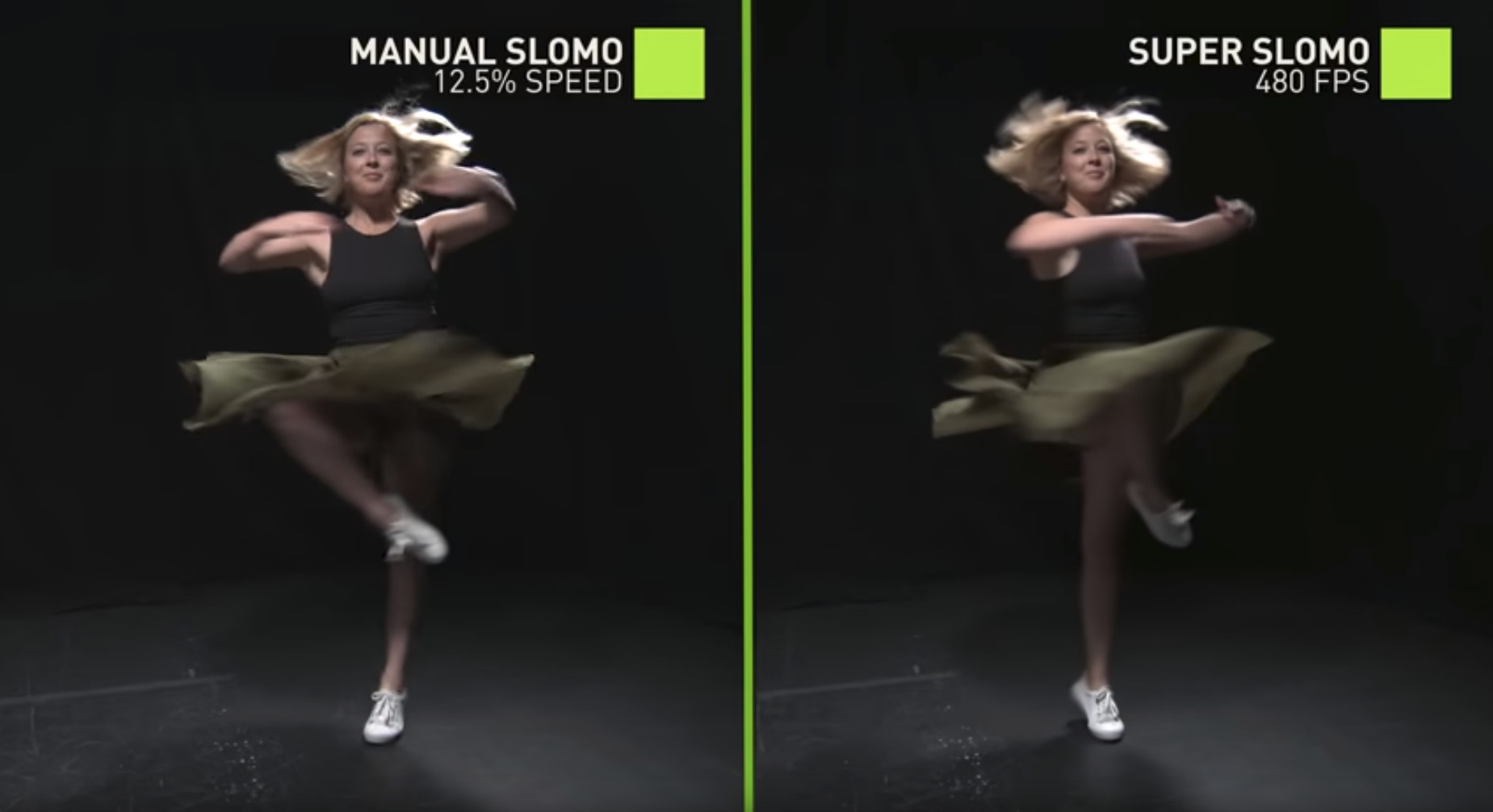

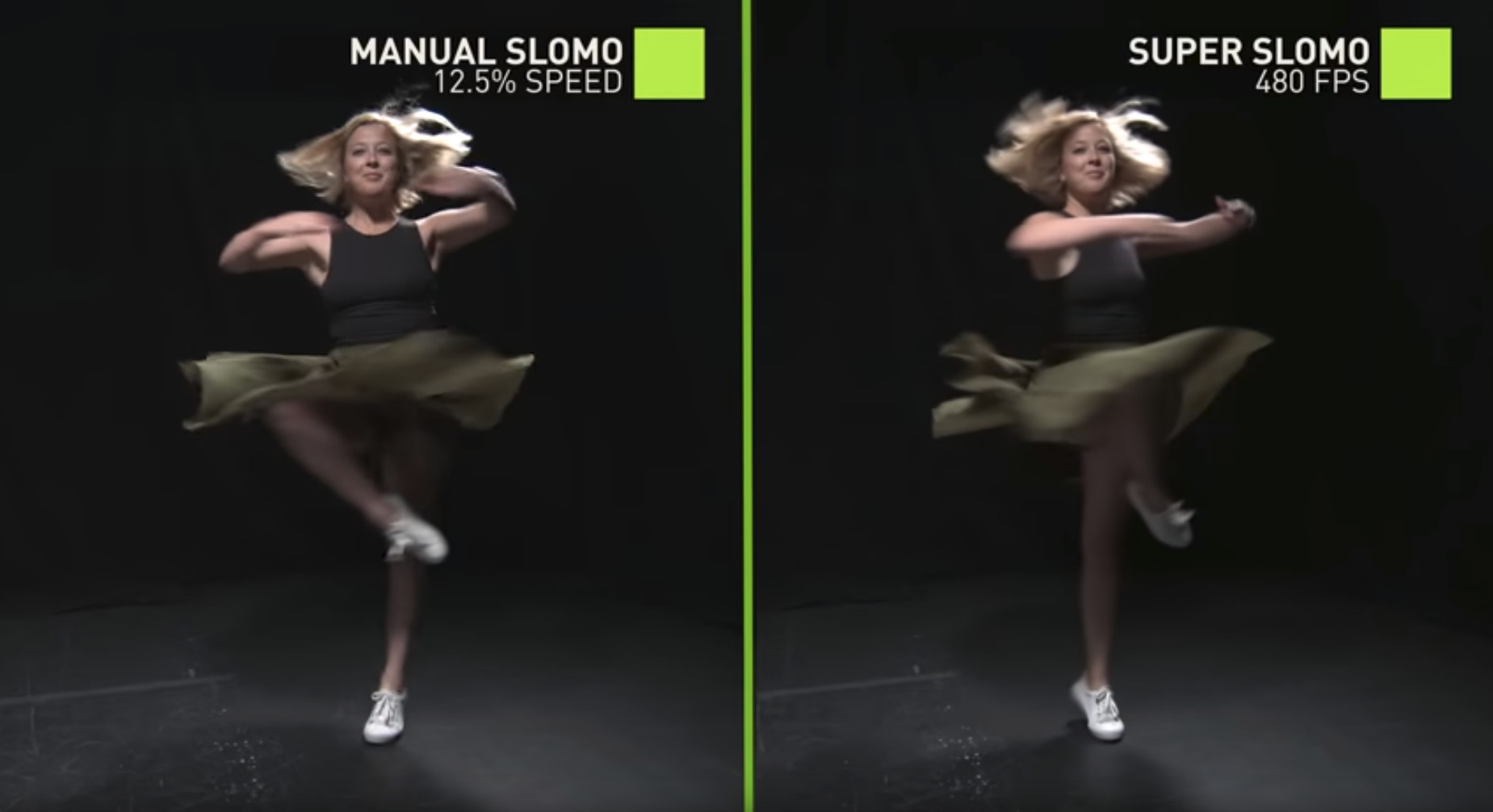

The AI for Content Creation workshop (AICCW) at CVPR 2020 brings together researchers in computer vision, machine learning, and AI. Content creation has several important applications, ranging from virtual reality, videography, and gaming to retail and advertising. Recent progress in deep learning and machine learning has made it possible to turn hours of manual, painstaking content creation work into minutes or seconds of automated work. For instance, generative adversarial networks (GANs) have been used to produce photorealistic images of shoes, bags, and other articles of clothing, of interior and industrial designs, and even of computer game scenes. Neural networks can create impressive and accurate slow-motion sequences from videos captured at standard frame rates, side-stepping the need for specialized and expensive hardware. Style transfer algorithms can convincingly render the content of one image in the style of another, offering unique opportunities for generating additional and more diverse training data as well as for creating awe-inspiring artistic images. Learned priors can also be combined with explicit geometric constraints, yielding realistic and visually pleasing solutions to traditional problems such as novel view synthesis, in particular for the more complex case of view extrapolation.

AI for content creation lies at the intersection of the graphics, computer vision, and design communities. However, researchers and professionals in these fields may not be aware of its full potential and inner workings. The workshop therefore consists of two parts: techniques for content creation and applications for content creation. The workshop has three goals:

More broadly, we hope that the workshop will serve as a forum to discuss the latest topics in content creation and the challenges that vision and learning researchers can help solve.

Welcome!

- Deqing Sun, Ming-Yu Liu, Lu Jiang, James Tompkin, Weilong Yang, and Kalyan Sunkavalli.


| Author | Title | Type | Links | Video |
|---|---|---|---|---|
| Deqing Sun | Introduction | | | 🔗 |
| Invited Speakers | | | | |
| Phillip Isola | Generative Models as Data++ | | | 🔗 |
| Angjoo Kanazawa | Generating 3D Content from Images | | | 🔗 |
| Irfan Essa | AI (CV/ML) for Content Creation | | | 🔗 |
| Justin Johnson | Generating 3D Content from 2D Supervision | | | 🔗 |
| Papers: Session 1 | | | | |
| Tristan Sylvain (Mila); Pengchuan Zhang (Microsoft Research AI); Yoshua Bengio (Mila); R Devon Hjelm, Shikhar Sharma (Microsoft Research) | Object-centric Image Generation from Layouts | Extended abstract | [PDF] | 🔗 |
| Yuchuan Gou (paii-labs.com); Qiancheng Wu (University of California, Berkeley); Minghao Li, Bo Gong, Mei Han (paii-labs.com) | SegAttnGAN: Text to Image Generation with Segmentation Attention | Extended abstract | [arXiv] | 🔗 |
| Junbum Cha, Sanghyuk Chun, Gayoung Lee, Bado Lee, Seonghyeon Kim, Hwalsuk Lee (Clova AI Research, NAVER Corp.) | Towards High-quality Few-shot Font Generation with Dual Memory Attention | Extended abstract | [PDF] | 🔗 |
| Sangwoo Mo (KAIST); Minsu Cho (POSTECH); Jinwoo Shin (KAIST) | Freeze the Discriminator: A Simple Baseline for Fine-tuning GANs | Extended abstract | [arXiv] | 🔗 |
| Yuheng Li, Krishna Kumar Singh, Utkarsh Ojha, Yong Jae Lee (University of California, Davis) | MixNMatch: Multifactor Disentanglement and Encoding for Conditional Image Generation | Paper (also at CVPR 2020) | [PDF] [arXiv] [Project Webpage] | 🔗 |
| Yujun Shen, Bolei Zhou (CUHK) | Interpreting the Latent Space of GANs for Semantic Face Editing | Paper (also at CVPR 2020) | [arXiv] [Project Webpage] [Extended Abstract PDF] | 🔗 |
| Rameen Abdal, Peter Wonka (KAUST); Yipeng Qin (Cardiff University) | Image2StyleGAN++: How to Edit the Embedded Images? | Paper (also at CVPR 2020) | [arXiv] [Video (1 min)] | 🔗 |
| Peihao Zhu, Rameen Abdal (KAUST); Yipeng Qin (Cardiff University); Peter Wonka (KAUST) | SEAN: Image Synthesis with Semantic Region-adaptive Normalization | Paper (also at CVPR 2020) | [arXiv] [Project Webpage] | 🔗 |


| Author | Title | Type | Links | Video |
|---|---|---|---|---|
| James Tompkin | Introduction | | | 🔗 |
| Invited Speakers | | | | |
| Sanja Fidler | AI for 3D Content Creation | | | 🔗 |
| Aaron Hertzmann | What if CVPR is a Graphics Conference? | | | 🔗 |
| Ying Cao | Data-driven Graphic Design: Bringing AI into Graphic Design | | | 🔗 |
| Ming-Hsuan Yang | Learning to Synthesize Image and Video Contents | | | 🔗 |
| Papers: Session 2 | | | | |
| Patrick Esser, Robin Rombach, Björn Ommer (Heidelberg University) | Network Fusion for Content Creation with Conditional INNs | Paper | [arXiv] [Webpage] | 🔗 |
| Tianhao Zhang, Lu Jiang (Google Research); Weilong Yang (Google Inc.) | Text-guided Image Manipulation via Local Feature Editing | Paper | [PDF] | 🔗 |
| Kuniaki Saito, Kate Saenko (Boston University); Ming-Yu Liu (NVIDIA) | COCO-FUNIT: Few-shot Unsupervised Image Translation with a Content-conditioned Style Encoder | Extended abstract | [PDF] | 🔗 |
| Hung-Yu Tseng, Hsin-Ying Lee (University of California, Merced); Lu Jiang (Google Research); Weilong Yang (Google Inc.); Ming-Hsuan Yang (University of California, Merced) | RetrieveGAN: Image Synthesis via Differentiable Patch Retrieval | Extended abstract | [PDF] | 🔗 |
| Yunjey Choi, Youngjung Uh (Clova AI Research, NAVER Corp.); Jaejun Yoo (EPFL); Jung-Woo Ha (Clova AI Research, NAVER Corp.) | StarGAN v2: Diverse Image Synthesis for Multiple Domains | Paper (also at CVPR 2020) | [arXiv] [Project Webpage] | 🔗 |
| David Stap, Maartje A ter Hoeve, Sarah Ibrahimi (University of Amsterdam) | Conditional Image Generation and Manipulation for User-specified Content | Paper | [arXiv] | 🔗 |
| Hanxiang Hao, Sriram Baireddy, Amy R. Reibman, Edward Delp (Purdue University) | FaR-GAN for One-shot Face Reenactment | Paper | [arXiv] | 🔗 |
| Youssef Alami Mejjati (University of Bath); Zejiang Shen, Michael Snower, Aaron Gokaslan (Brown University); Oliver Wang (Adobe Systems Inc); James Tompkin (Brown University); Kwang In Kim (UNIST) | Generating Object Stamps | Extended abstract | [PDF] [arXiv] | 🔗 |
| Qingyuan Zheng (University of Maryland, Baltimore County); Zhuoru Li (Project HAT); Adam Bargteil (University of Maryland, Baltimore County) | Learning to Shadow Hand-drawn Sketches | Paper (also at CVPR 2020) | [arXiv] [Project Webpage] | 🔗 |
| Shan-Jean Wu, Chih-Yuan Yang, Jane Yung-jen Hsu (National Taiwan University) | CalliGAN: Style and Structure-aware Chinese Calligraphy Generator | Paper | [arXiv] [Code] | 🔗 |
| Surgan Jandial\* (IIT Hyderabad); Ayush Chopra\* (Media and Data Science Research Lab, Adobe); Balaji Krishnamurthy\* (Media and Data Science Research Lab, Adobe); Kumar Ayush (Stanford University); Mayur Hemani (Media and Data Science Research Lab, Adobe); Abhijeet Halwai (Microsoft Research) | SieveNet: A Unified Framework for Image Based Virtual Try-On | Paper (also at WACV 2020) | [arXiv] | 🔗 |
| | Closing remarks | | | 🔗 |