GaussiGAN: Controllable Image Synthesis with 3D Gaussians from Unposed Silhouettes

Youssef A. Mejjati, Isa Milefchik, Aaron Gokaslan, Oliver Wang, Kwang In Kim, James Tompkin

BMVC 2021 + CVPRW AI for Content Creation 2021

Abstract

We present an algorithm to reconstruct a coarse representation of objects from unposed multi-view 2D mask supervision. Our approach learns to represent object shape and pose with a set of self-supervised canonical 3D anisotropic Gaussians, via a perspective camera and a set of per-instance transforms. We show that this robustly estimates a 3D space for the camera and object, while recent state-of-the-art voxel-based baselines struggle to reconstruct either masks or textures in this setting. We show results on synthetic datasets with realistic lighting, and demonstrate an application of object insertion. This helps move towards structured representations that handle more real-world variation in learned object reconstruction.
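To make the geometry concrete, here is a minimal sketch of how one anisotropic 3D Gaussian could be projected through a perspective camera into a soft 2D silhouette contribution. It is not the authors' implementation. It assumes the Gaussian is already expressed in camera coordinates, uses a pinhole camera with a hypothetical `focal` parameter, and uses a first-order (Jacobian) approximation of the perspective projection. The function names `project_gaussian` and `soft_mask_value` are illustrative only.

```python
import math

def project_gaussian(mean, cov, focal=1.0):
    """First-order perspective projection of a 3D Gaussian (camera coordinates).

    mean: (x, y, z) centre; cov: 3x3 covariance as nested lists.
    Returns the 2D mean and 2x2 covariance on the image plane.
    """
    x, y, z = mean
    if z <= 0:
        raise ValueError("Gaussian centre must lie in front of the camera (z > 0)")
    # Pinhole projection of the centre.
    mean2d = (focal * x / z, focal * y / z)
    # Jacobian of (x, y, z) -> (f*x/z, f*y/z), evaluated at the centre.
    J = [
        [focal / z, 0.0, -focal * x / z**2],
        [0.0, focal / z, -focal * y / z**2],
    ]
    # cov2d = J * cov * J^T, written with plain loops to stay dependency-free.
    JC = [[sum(J[i][k] * cov[k][j] for k in range(3)) for j in range(3)]
          for i in range(2)]
    cov2d = [[sum(JC[i][k] * J[j][k] for k in range(3)) for j in range(2)]
             for i in range(2)]
    return mean2d, cov2d

def soft_mask_value(pixel, mean2d, cov2d):
    """Unnormalised Gaussian falloff in (0, 1] at an image-plane point."""
    (a, b), (c, d) = cov2d
    det = a * d - b * c
    dx, dy = pixel[0] - mean2d[0], pixel[1] - mean2d[1]
    # Squared Mahalanobis distance via the closed-form 2x2 inverse.
    m = (d * dx * dx - (b + c) * dx * dy + a * dy * dy) / det
    return math.exp(-0.5 * m)

# Usage: an elongated Gaussian two units in front of the camera.
mean2d, cov2d = project_gaussian((0.0, 0.0, 2.0),
                                 [[0.04, 0.0, 0.0],
                                  [0.0, 0.01, 0.0],
                                  [0.0, 0.0, 0.09]])
centre_value = soft_mask_value((0.0, 0.0), mean2d, cov2d)
```

In a sketch like this, a full silhouette would combine the contributions of several such Gaussians, each moved by its own per-instance transform before projection. How the contributions are combined and how the transforms are learned is left out here.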

- BMVC (10 pages), CVPRW (4 pages), arXiv (8+ pages)

- BMVC Supplemental Paper + Video

- Code + data + models

- Interactive app (Flask)

- Video MP4

GaussiGAN presentation (10 mins, YouTube) @ AI for Content Creation @ CVPR 2021

Bibtex

@inproceedings{mejjati2021gaussigan,

author = {Youssef A. Mejjati and Isa Milefchik and Aaron Gokaslan and Oliver Wang and Kwang In Kim and James Tompkin},

title = {GaussiGAN: Controllable Image Synthesis with 3D Gaussians from Unposed Silhouettes},

booktitle = {British Machine Vision Conference (BMVC)},

month = {Nov},

year = {2021},

}

Generating Object Stamps—Precursor in 2D

Youssef A. Mejjati, Zejiang Shen, Michael Snower, Aaron Gokaslan, Oliver Wang, James Tompkin, Kwang In Kim

CVPR Workshop on AI for Content Creation (AICC) 2020

(4-page extended abstract)

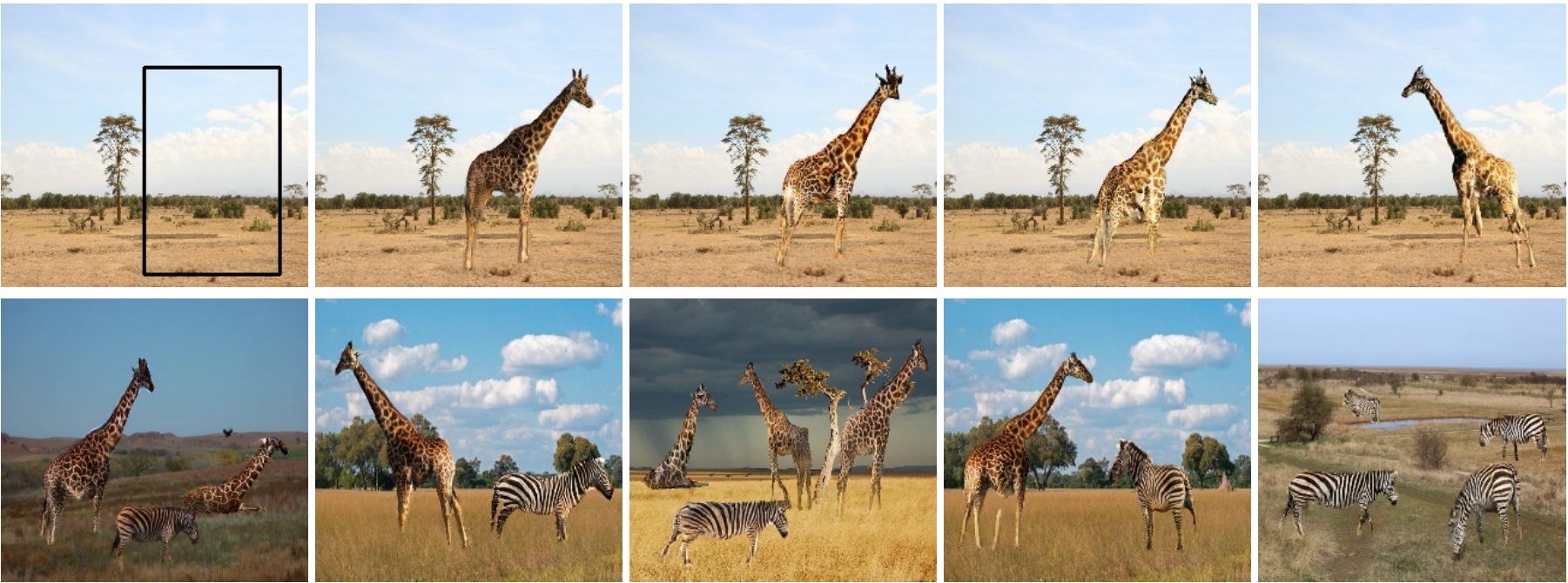

Top row: Given a user-provided background image, object class (giraffe), and bounding box (far left), our method generates objects with diverse shapes and textures (right). Bottom row: We combine multiple object classes across scenes and match illumination.

Abstract

We present an algorithm to generate diverse foreground objects and composite them into background images using a GAN architecture. Given an object class, a user-provided bounding box, and a background image, we first use a mask generator to create an object shape, and then use a texture generator to fill the mask such that the texture integrates with the background. By separating object insertion into these two stages, our model improves the realism of the diverse objects it generates while keeping them consistent with the provided background image. Our results on the challenging COCO dataset show improved overall quality and diversity compared to state-of-the-art object insertion approaches.
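The final compositing step of such a two-stage pipeline can be illustrated with a short sketch. It assumes the mask and texture generators have already produced a soft mask and a texture patch sized to the bounding box; both generators are learned networks and are not reproduced here. The blend shown is a standard alpha composite, which is an assumption about how the output image is assembled, and the names `composite` and `paste_in_box` are hypothetical. Images are single-channel nested lists to keep the example dependency-free.

```python
def composite(background, texture, mask):
    """Alpha-blend texture over background using a soft mask in [0, 1]."""
    return [
        [m * t + (1.0 - m) * b for b, t, m in zip(b_row, t_row, m_row)]
        for b_row, t_row, m_row in zip(background, texture, mask)
    ]

def paste_in_box(background, patch, patch_mask, top, left):
    """Composite a generated patch into the bounding box at (top, left).

    The input background is left unmodified; a new image is returned.
    """
    height, width = len(patch), len(patch[0])
    out = [row[:] for row in background]
    # Crop the region of the background covered by the bounding box.
    region = [row[left:left + width] for row in background[top:top + height]]
    blended = composite(region, patch, patch_mask)
    # Write the blended pixels back into the copy.
    for i, row in enumerate(blended):
        out[top + i][left:left + width] = row
    return out

# Usage: a 2x2 patch placed in the lower-right of a 3x3 background.
background = [[0.0] * 3 for _ in range(3)]
patch = [[1.0, 1.0], [1.0, 1.0]]
patch_mask = [[1.0, 0.5], [0.0, 0.0]]
result = paste_in_box(background, patch, patch_mask, top=1, left=1)
```

Keeping the mask separate from the texture means the same shape can be filled with different textures, or the same texture blended against different backgrounds, without regenerating the other stage.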


- Paper: arXiv (8 pages + supplemental)

- Code

Bibtex

@inproceedings{mejjati2020objectstamps,

author = {Youssef A. Mejjati and Zejiang Shen and Michael Snower and Aaron Gokaslan and Oliver Wang and James Tompkin and Kwang In Kim},

title = {Generating Object Stamps},

booktitle = {Computer Vision and Pattern Recognition Workshop on AI for Content Creation (CVPRW)},

month = {June},

year = {2020},

}

Related projects

- Unsupervised Attention-guided Image-to-image Translation (NeurIPS 2018) | Code on Github

- Improving Shape Deformation in Unsupervised Image-to-image Translation (ECCV 2018) | Code on Github

Related presentations

- Learning Controls through Structure (60 mins, YouTube; PPTX 158MB) @ 2D3DAI 2021-04-19

- Deep Learning for Content Creation Tutorial (30 mins, YouTube; PPTX 58MB) @ CVPR 2019

Acknowledgements

GaussiGAN: Thank you to Numair Khan for the dataset generator, and to Helge Rhodin and Srinath Sridhar for engaging discussions. Kwang In Kim was supported by the National Research Foundation of Korea (NRF) grant NRF-2021R1A2C2012195, and we thank Adobe for a gift.

Generating Object Stamps: Youssef A. Mejjati thanks the European Union’s Horizon 2020 research and innovation programme under the Marie Skłodowska-Curie grant agreement No 665992, and the UK’s EPSRC Centre for Doctoral Training in Digital Entertainment (CDE), EP/L016540/1. James Tompkin and Kwang In Kim thank Adobe for gifts.

Zip icon adapted from tastic mimetypes by Untergunter, CC BY-NC-SA 3.0 licence.
